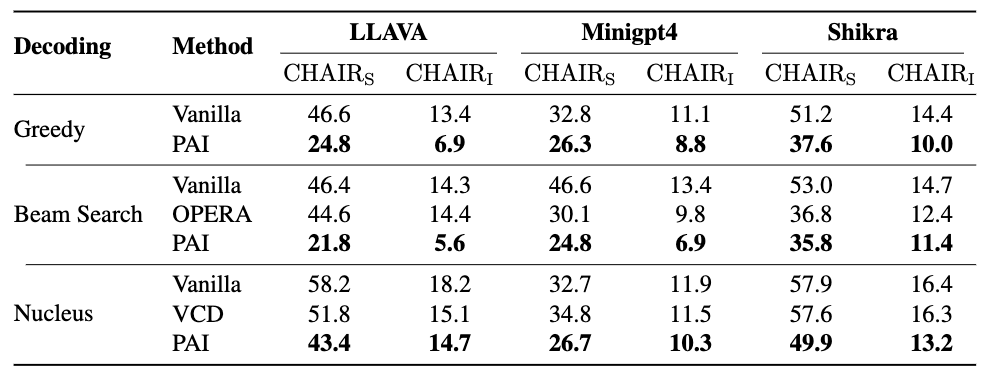

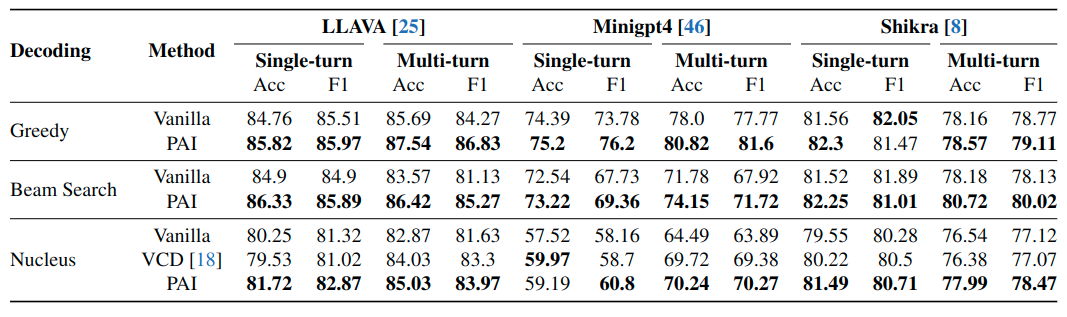

Large Vision-Language Models (LVLMs) align image features to the input of Large Language Models (LLMs), enhancing multi-modal reasoning and knowledge utilization. However, the disparity in scale between the vision and language components means that the LLM plays the dominant role in multimodal comprehension. This imbalance can lead to hallucinated outputs. In particular, LVLMs may generate the same descriptions even when the visual input is removed, which suggests that these outputs are driven disproportionately by the textual context. We refer to this phenomenon as "text inertia." To counteract it, we introduce a training-free algorithm that balances image comprehension and language inference. Specifically, we adjust and amplify the attention weights assigned to image tokens, giving visual elements greater prominence. In addition, we subtract the logits obtained from pure text input from the logits obtained from multimodal input, which keeps the model from being biased toward the LLM alone. By enhancing image tokens and suppressing the stubborn output of the LLM, we make the LVLM pay more attention to images, alleviating text inertia and reducing hallucination. Our extensive experiments show that this method substantially reduces the frequency of hallucinated outputs across various LVLMs and across different metrics.
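The two steps described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the `alpha` and `gamma` parameters, the choice to rescale post-softmax weights, and the contrastive form `(1 + gamma) * multimodal - gamma * text` are all assumptions made for illustration, since the abstract does not give the exact formulas.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def amplify_image_attention(scores, image_mask, alpha=0.5):
    """Boost the attention weights of image tokens for one query.

    scores:     raw attention scores, one per key token
    image_mask: True where the key token is an image token
    alpha:      hypothetical amplification strength (assumption)
    """
    weights = softmax(scores)
    # Scale image-token weights by (1 + alpha), leave text tokens unchanged.
    boosted = [
        w * (1.0 + alpha) if is_image else w
        for w, is_image in zip(weights, image_mask)
    ]
    # Renormalize so the weights sum to 1 again.
    total = sum(boosted)
    return [b / total for b in boosted]


def debias_logits(multimodal_logits, text_only_logits, gamma=1.0):
    """Subtract text-only logits from multimodal logits.

    gamma is a hypothetical strength for the subtraction (assumption).
    Tokens favored by the text-only pass are pushed down.
    """
    return [
        (1.0 + gamma) * m - gamma * t
        for m, t in zip(multimodal_logits, text_only_logits)
    ]


if __name__ == "__main__":
    # Two image tokens followed by two text tokens, all scored equally.
    print(amplify_image_attention([1.0] * 4, [True, True, False, False], alpha=1.0))
    # Token 0 is favored by the text-only pass, so its advantage is removed.
    print(debias_logits([2.0, 1.0], [2.0, 0.0], gamma=1.0))
```

In a real LVLM the first function would apply inside the decoder's attention layers and the second at each decoding step, with one forward pass that includes the image and one that omits it.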

@article{liu2024paying,
  title={Paying more attention to image: A training-free method for alleviating hallucination in lvlms},
  author={Liu, Shi and Zheng, Kecheng and Chen, Wei},
  journal={arXiv preprint arXiv:2407.21771},
  year={2024}
}